CNetworkQueue - SW (Nov 2006 to Mar 2008)

RC3

Latest Changes and Updates

- SW NQv1RC21 -> NQv1RC3 (April 2008)

- SW NQv1RC2 -> NQv1RC21 (March 2008)

- SW upgraded to NQv1RC2 (Jul 2007 to Aug 2007)

- SW upgraded, bugfixed NQv1RC2 (Aug 2007 to Oct 2007)

Introduction

The network queue is middle-layer software used to establish communication between two or more network devices. The main goal is to provide a fast and easy way to connect different embedded computing devices to each other or to a control system server.

Overview

Embedded systems with a direct Ethernet/IP interface are increasingly being deployed at particle accelerators. A defined way is needed to exchange information between such embedded systems and mature control system protocols. Because earlier attempts to port control system protocol kernels directly to the embedded systems were ultimately unsuccessful, a defined, stable and portable way of exchanging information between full-featured control system servers and embedded systems was needed, one that takes endianness, RISC/CISC architecture, ease of use, portability and stability into account. It was agreed to implement a so-called Network Queue to transport "messages" from local peer to remote peer and vice versa. The following key points outline the basics of the network queue implementation:

- some ideas and concepts taken from SNMP protocol

- direct peer to peer connection (UDP unicast)

- one message must fit in a single UDP packet (the MTU defines the net data size); if bigger messages are required, only a subset of platforms can be used

- decoupled send/receive behaviour via two additional tasks / threads

- transparent endianness conversion, marshalling and network distribution

- 1:n and m:n peer support

- source code is designed to compile and link warning-free (-Wall) and error-free

- platform-independent C++ source code (avoided STL code after prototype failure)

- platform dependency is maintained via wrapper Platform.h/cpp for any supported platform

- platforms tested and supported: win32, linux32, linux64, solaris, NIOS2-LWIP-uc/OS-II, NIOS2-Niche-uc/OS-II

- statistics counting while running (to detect possible problems)
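The "transparent endianness conversion" item above can be pictured with a small sketch. It assumes the little-endian packet layout described in the network transport section; the helper names putU32LittleEndian and getU32LittleEndian are hypothetical and are not part of the Network Queue sources. Because the bytes are produced by shifting rather than by copying memory, the result is the same on big-endian and little-endian hosts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helpers for illustration only; not the Network Queue API.

// Write a 32-bit value into a packet buffer, least significant byte first.
inline void putU32LittleEndian(std::uint8_t* out, std::uint32_t value)
{
    assert(out != nullptr);
    for (std::size_t i = 0; i < 4; ++i) {
        out[i] = static_cast<std::uint8_t>((value >> (8 * i)) & 0xFFu);
    }
}

// Read a 32-bit value back from a packet buffer written as above.
inline std::uint32_t getU32LittleEndian(const std::uint8_t* in)
{
    assert(in != nullptr);
    std::uint32_t value = 0;
    for (std::size_t i = 0; i < 4; ++i) {
        value |= static_cast<std::uint32_t>(in[i]) << (8 * i);
    }
    return value;
}
```

A sender would call putU32LittleEndian while marshalling, and the receiver would call getU32LittleEndian while unmarshalling, so neither side needs to know the byte order of the other.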

The first planned use cases are Neutron Detector data transmission, Klystron Interlock and Mover/Rotational Steerer business. It should be fairly easy to adapt the source code to other use cases.

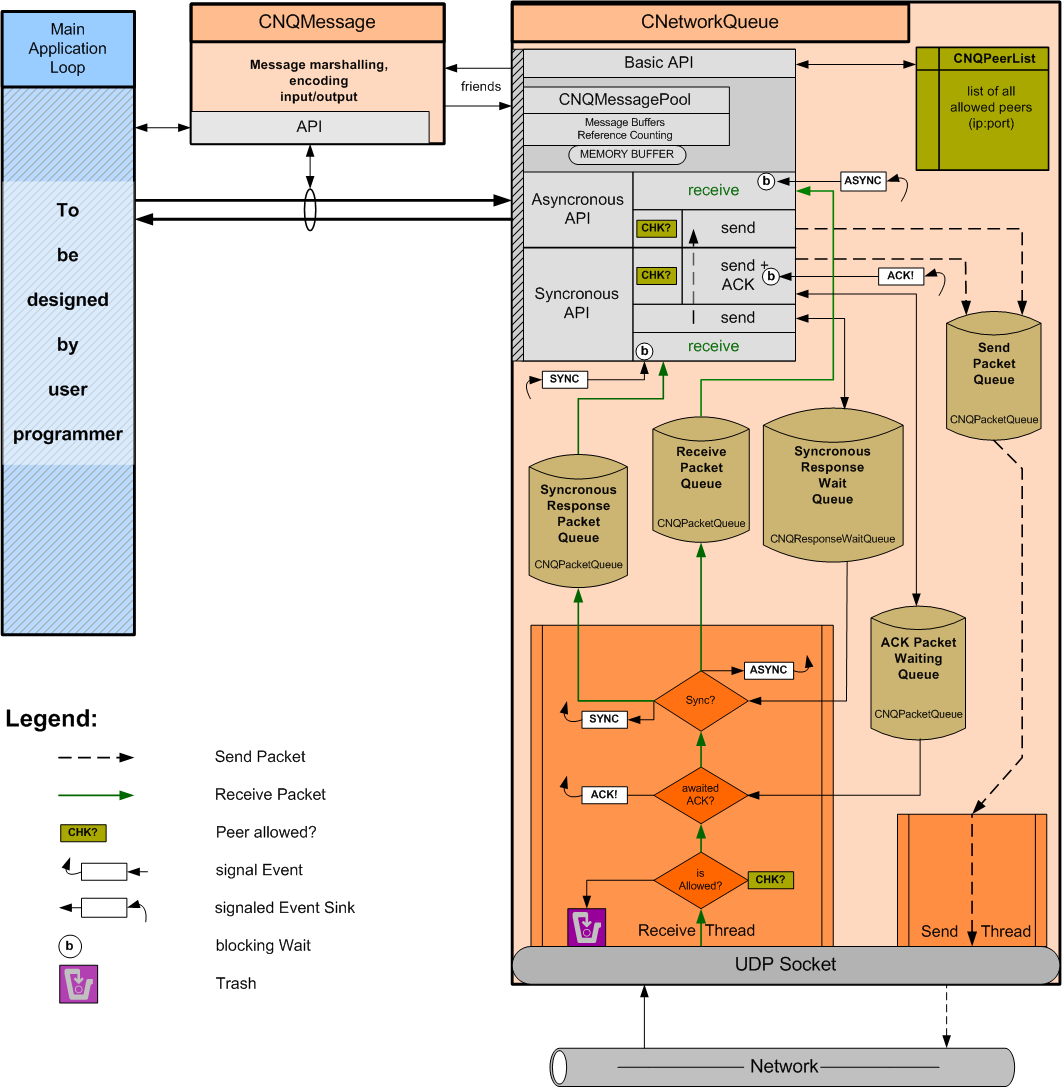

Architectural Overview

The source code is designed to compile and link warning-free (-Wall) and error-free on any supported platform. Platform dependencies are maintained through the "wrapper files" Platform.cpp and Platform.h, which implement the required operating system functionality: sockets, task or thread management, 'counting up to N' semaphores, mutexes, error handling, sleeping, data types and endianness changers.

Network transport, marshalling

On the network, the UDP packet always contains little-endian data. While a logical message is encoded, the packet is marshalled. Directly inside the receive thread at the remote peer, the packet is unmarshalled and the logical message is decoded. In this way, marshalling and network transport are fully transparent to the user. On marshalling, the logical message is put into the physical packet layout (little endian) and wrapped in a packet header and a packet tail. The contents of this wrapping are checked on receipt at the remote peer to make sure that only valid packets are passed on. On UDP sockets, packet filtering is implemented: a packet received from an unknown peer is discarded, and a packet that is about to be sent to an unknown peer is rejected. Additional peers have to be registered with the Network Queue class instance. As a further line of defence for the network transport, the packet marshalling provides a rough check of the integrity of the network packet.

Class Layout

Class (and Class Relationship) Overview

The user code interfaces with the Network Queue API through two classes. The first is CNQMessage, which abstracts message creation, encoding, marshalling, decoding and disposal. The second is CNetworkQueue, which is the core class of the Network Queue. CNQMessage and CNetworkQueue are friends: for performance reasons, they are able to access each other's private variables directly. The CNetworkQueue API consists of several parts:

- basic API (creation, closing, error handling, list of allowed remote peers)

- CNQMessagePool (pooling of data storage locations for n CNQMessage instances to avoid dynamic memory handling)

- asynchronous API (send a message immediately (fire and forget), receive an answer that is waiting)

- synchronous API (request to and response from remote peer simultaneously, sending a message with acknowledge and timeout)

The basic API provides functionality to open/create a local Network Queue endpoint and to close it cleanly. Moreover, methods are provided to add a remote peer to, or remove it from, the list of allowed peers. Only peers that have been registered in this list are able to talk to this local endpoint. A peer is defined by a unique combination of IPv4 address and port number. In addition, methods are provided to obtain extended error information and statistical information.
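The list of allowed peers can be sketched as a small fixed-capacity table keyed by IPv4 address and port. This is an illustration under stated assumptions, not the CNetworkQueue implementation: the names Peer, PeerList, samePeer and the capacity kMaxPeers are hypothetical. In keeping with the project's decision to avoid STL code, the sketch uses a plain array.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical types for illustration only; not the CNetworkQueue API.
struct Peer {
    std::uint32_t ipv4;  // IPv4 address as a 32-bit number
    std::uint16_t port;  // UDP port number
};

// Two peers are the same if both address and port match.
inline bool samePeer(const Peer& a, const Peer& b)
{
    return a.ipv4 == b.ipv4 && a.port == b.port;
}

// Fixed-capacity allow-list: no dynamic memory, no STL containers.
class PeerList {
public:
    static const std::size_t kMaxPeers = 8;  // assumed capacity

    PeerList() : count_(0) {}

    // Returns false if the list is full; adding a known peer changes nothing.
    bool add(const Peer& p)
    {
        if (contains(p)) return true;
        if (count_ == kMaxPeers) return false;
        peers_[count_++] = p;
        assert(count_ <= kMaxPeers);
        return true;
    }

    // Returns false if the peer was not registered.
    bool remove(const Peer& p)
    {
        for (std::size_t i = 0; i < count_; ++i) {
            if (samePeer(peers_[i], p)) {
                peers_[i] = peers_[--count_];  // fill the gap with the last entry
                return true;
            }
        }
        return false;
    }

    // True if the peer is currently allowed to talk to this endpoint.
    bool contains(const Peer& p) const
    {
        for (std::size_t i = 0; i < count_; ++i) {
            if (samePeer(peers_[i], p)) return true;
        }
        return false;
    }

private:
    Peer peers_[kMaxPeers];
    std::size_t count_;
};
```

Note that the same address with a different port counts as a different peer, which matches the definition of a peer given above.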

Inside CNetworkQueue there is a pool of a defined number of CNQMessage instances, which can be requested, referenced and finally put back into the pool. This functionality is provided by API methods. The purpose of this pool is to minimize dynamic memory allocation, which saves CPU cycles and stack segment consumption on slow embedded devices. Using this part of the API, the user programmer can request a CNQMessage instance in order to fill it with the contents that need to be transferred to a remote peer. Afterwards, the CNQMessage instance can be passed to either the synchronous or the asynchronous API in order to send it out. It should be noted that after sending or receiving a CNQMessage instance with the synchronous or asynchronous API methods, it is important to put the instance back into the pool once it is no longer needed. Otherwise the pool may eventually run empty. The number of CNQMessage instances in the pool is adjustable at compile time by a constant inside NQDeclarations.h.
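The pooling idea can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: Message stands in for CNQMessage, and MessagePool, acquire, release and kPoolSize are hypothetical names rather than the real API. The locking that a pool shared between threads would need is left out.

```cpp
#include <cassert>
#include <cstddef>

// Stand-in for CNQMessage; the real class carries the message contents.
struct Message {
    bool inUse;
    Message() : inUse(false) {}
};

// Fixed-size pool: all storage is reserved up front, so nothing is
// allocated or freed while the queue is running.
class MessagePool {
public:
    static const std::size_t kPoolSize = 4;  // assumed; a compile-time constant

    // Hand out a free message, or a null pointer if the pool is exhausted.
    Message* acquire()
    {
        for (std::size_t i = 0; i < kPoolSize; ++i) {
            if (!slots_[i].inUse) {
                slots_[i].inUse = true;
                return &slots_[i];
            }
        }
        return nullptr;
    }

    // Put a message back so that it can be handed out again.
    void release(Message* m)
    {
        assert(m >= slots_ && m < slots_ + kPoolSize);  // must belong to this pool
        m->inUse = false;
    }

private:
    Message slots_[kPoolSize];
};
```

The sketch also shows why returning instances matters: once every slot is marked as in use, acquire has nothing left to hand out until a release happens.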

The asynchronous API provides a method to send (fire and forget) an already constructed CNQMessage object. A second method probes, with immediate return, whether a new CNQMessage has been received from any remote peer. A third method checks whether a new CNQMessage has arrived or arrives within a given period from a remote peer (probe with timeout). Blocking with timeout is depicted in the diagram by a circled b (see legend).

The synchronous API consists of two methods. The first one sends a user-defined CNQMessage out and awaits an acknowledge packet (ACK) from the remote peer within a defined timeout period. Execution only continues once the ACK has been received successfully or the timeout has expired. It must be noted that even if the ACK was not received locally, the original CNQMessage might have been received successfully at the remote end, with only the ACK packet being lost somewhere on the way back. The second method of the synchronous API emulates something like a function call. One can send a 'request'-tagged CNQMessage and wait for a 'response'-tagged CNQMessage that was sent specifically as the answer to the original 'request' message. A timeout period must be specified in the function call so that execution is not blocked for an unlimited amount of time.
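The "continue on ACK or on timeout" behaviour can be sketched as follows. This is a simplified stand-in, not the actual implementation: the real code blocks on a semaphore that the receive thread signals, whereas this sketch polls a flag against a deadline. The names SendResult, waitForAck and the flag parameter are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Possible outcomes of a blocking send in this sketch.
enum SendResult { kAckReceived, kTimedOut };

// Wait until `ackArrived` becomes true or `timeoutMs` milliseconds have
// elapsed. In a real system the flag would be set by the receive thread.
inline SendResult waitForAck(const std::atomic<bool>& ackArrived,
                             unsigned timeoutMs)
{
    const auto deadline = std::chrono::steady_clock::now() +
                          std::chrono::milliseconds(timeoutMs);
    while (!ackArrived.load()) {
        if (std::chrono::steady_clock::now() >= deadline) {
            return kTimedOut;
        }
        // Polling stand-in for blocking on a semaphore.
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
    return kAckReceived;
}
```

A caller has to treat kTimedOut as "delivery unknown" rather than "delivery failed", because the message itself may have arrived even though its ACK did not.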

Inside of the CNetworkQueue class, a couple of packet queues are used to decouple/buffer sending and receiving from calls to the API of the class. Inside the queues, CNQMessage instances will be temporarily placed until they are required by the other end.

Before sending CNQMessage contents out, it is checked whether the destination is in the list of allowed peers, depicted by CHK! markers in the diagram. After this is successfully done, the message is placed in the send queue. The decoupled send thread/task will then send out the accumulated packets in the send queue as fast as it can.

When UDP packets are received, which happens inside the receive thread, they are examined to verify that the sender (ip:port) is in the list of allowed peers. If it is not, the packet is thrown away. It must be noted that any packet that contains neither a validly encoded CNQMessage nor an ACK packet is thrown away as well. In the next two stages, the packet is examined to determine whether

- it is an ACK packet and a blocking send() is waiting for it

- it is a response and the original request is waiting for it

If either of these rules matches, the packet is treated specially and is not passed on to the asynchronous receive packet queue. For an ACK, the ACK packet queue is examined and, if a matching blocking send() is found waiting, its semaphore is signalled. If none is found, a warning message is printed in debug mode and the ACK packet is thrown away. For an awaited response, the packet is placed as a CNQMessage instance into a dedicated queue and the semaphore of the blocking function call is signalled to release the block.
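The receive-side decisions described above can be summarized in a short sketch. All names (PacketKind, Route, ReceivedPacket, routePacket) are hypothetical, and the checks are reduced to boolean inputs. One point is an assumption rather than something stated above: a response for which no request is waiting is treated like an ordinary message and goes to the asynchronous receive queue.

```cpp
#include <cassert>

// What a validly decoded packet turned out to be.
enum PacketKind { kAck, kResponse, kOther };

// Where the receive thread sends the packet next.
enum Route {
    kDropped,                  // thrown away
    kSignalBlockedSend,        // signal the semaphore of a blocking send()
    kDeliverToWaitingRequest,  // dedicated queue, then signal the caller
    kAsyncReceiveQueue         // ordinary asynchronous delivery
};

struct ReceivedPacket {
    bool fromAllowedPeer;  // sender ip:port is in the list of allowed peers
    bool valid;            // contains a valid CNQMessage or an ACK
    PacketKind kind;
    bool waiterFound;      // a blocking send() or request is waiting for it
};

inline Route routePacket(const ReceivedPacket& p)
{
    if (!p.fromAllowedPeer || !p.valid) {
        return kDropped;
    }
    if (p.kind == kAck) {
        // An ACK nobody waits for is dropped (with a warning in debug mode).
        return p.waiterFound ? kSignalBlockedSend : kDropped;
    }
    if (p.kind == kResponse && p.waiterFound) {
        return kDeliverToWaitingRequest;
    }
    // Assumption: unmatched responses are handled like any other message.
    return kAsyncReceiveQueue;
}
```

Keeping the decision in one pure function like this makes the filtering order explicit: peer check and validity first, then the two special cases, then the default queue.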

Directory Contents

- doc - Documentation

- example - examples

- linux - nq_system.h and main for linux (including makefiles)

- nios-lwip-nios2sdk51 - Altera Nios2, LWIP and ucOS/II example project (Nios2 5.1 SDK and IDE)

- nios-niche-nios2eds61 - Altera Nios2, nicheStack and ucOS/II example project (Nios2 6.1 SDK and IDE)

- solaris - nq_system.h and main for solaris (including makefiles)

- win - nq_system.h and main for Windows, MS Visual C++ 6 project and workspace, Doxygen config

- GeneralMain.cpp - main test application (usable and used on any platform)

- include - include (header) files for any platform, also contains global include file "nq.h"

- platform - platform dependent code (usable for any supported platform)

- src - source code for all platforms

Source code explanation

The source code is well commented inline. API documentation is incorporated directly into the appropriate source and header files using Doxygen commands. As for platform-specific documentation, Platform.cpp and Platform.h are reasonably well documented inline. It should be noted that detailed Doxygen documentation is only available for the API parts of the Network Queue.

Source code naming conventions

To make the structure and behaviour of the source code easier to learn and understand, the following conventions apply throughout all source files:

- platform-dependent functions always start with "platform", e.g. "platformSleepMilliseconds"

- source-code files that are platform-independent start with uppercase letter, e.g. "Declarations.h"

- platform-dependent files start with a lowercase letter, e.g. "nq_system.h"

- CNQMessage contains data members with special prefixes; these members are encoded directly to and decoded directly from packets:

- opt -> "optAuthorisationID", optional parameter, free for the user programmer to use

- mandatory -> "mandatoryLogicalPacketType", parameter that must be set to a proper value; it is used internally, but can also be used by the user programmer for branching

- internal -> "internalPacketIndex", used only internally and in packets

- classes start with a big "C" letter, e.g. CNetworkQueue

Platform Specifics

Windows-platform

The implementation of the Network Queue started on this platform. During development, MS Visual C++ v6 SP6 was used. Threads, semaphores and mutexes are implemented using the raw win32 API.

Linux-platform

The implementation of the Network Queue was done on a 64-bit platform (SL4). GCC v.3.2.3 was used as compiler and linker. The POSIX API was used for threads (decoupled sending and receiving), semaphores and mutexes. The required "counting up to max" semaphore is implemented by hand because such an element is not directly supported by the POSIX API.

NIOS2-LWIP-ucOS/II platform

The implementation of the Network Queue for the Nios2 platform was done using Nios2 SDK and IDE v5.1 with the latest patches as of January 2007. The Nios2 SDK v5.1 includes LWIP v1.1.0 and ucOS/II v2.77. Testing was done on a Klystron Interlock reference board (CntrlM #5), which embeds an Altera Nios2 softcore processor running on an Altera Cyclone II FPGA.

As for the implementation details on the Nios2 platform, the mutex was created as a binary semaphore. A dedicated mutex object is available in ucOS/II, but because of a breaking source dependency, an implementation using this type was not practical. The "counting up to max" semaphore is implemented by hand because the ucOS/II 2.77 API does not support this element.

Tasks (using the ucOS/II and LWIP APIs) are used to implement the decoupled send and receive functionality. Platform-independent semaphores and mutexes are implemented using the ucOS/II API.
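Both the Linux and the Nios2 ports implement the "counting up to max" semaphore by hand. The sketch below shows only the counting rule, under the assumption that such a semaphore behaves like a Win32 semaphore created with a maximum count, where a release fails once the count is at its maximum. The class name BoundedCounter and its methods are hypothetical; blocking and the mutex that would protect the counter are deliberately omitted.

```cpp
#include <cassert>

// Counting rule only. A real implementation would guard count_ with a
// mutex and block in the wait operation instead of returning false.
class BoundedCounter {
public:
    BoundedCounter(unsigned initial, unsigned maximum)
        : count_(initial), max_(maximum)
    {
        assert(initial <= maximum);
    }

    // Signal: succeeds unless the count is already at its maximum.
    bool post()
    {
        if (count_ == max_) return false;
        ++count_;
        return true;
    }

    // Non-blocking wait: takes one unit if one is available.
    bool tryWait()
    {
        if (count_ == 0) return false;
        --count_;
        return true;
    }

    unsigned count() const { return count_; }

private:
    unsigned count_;
    unsigned max_;
};
```

With a maximum of 1 the same rule yields a binary semaphore, which is how the mutex is built on the Nios2 platform according to the description above.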

- Note:

- The LWIP task stack size (set in the Nios2 IDE System Library Properties) should be at least 65536 bytes (16384 DWORDS)! Prototyping and testing showed that a smaller value may cause irregular behaviour and hard-to-reproduce stack-related bugs that are very difficult to debug.

NIOS2-Niche-ucOS/II platform

The second implementation of the Network Queue for the Nios2 platform was done using Nios2 SDK and IDE v6.1 with the latest patches as of October 2007. The Nios2 SDK v6.1 includes InterNiche NicheStack and ucOS/II v2.77. Testing was done on a Klystron Interlock reference board (CntrlM #5), which embeds an Altera Nios2 softcore processor running on an Altera Cyclone II FPGA.

As for the implementation details on the Nios2 platform, details regarding ucOS/II can be found in the previous section. The second implementation is an adaptation to InterNiche nicheStack for Altera Nios2. Altera decided to deprecate the LWIP stack, and the design guideline is to use nicheStack from now on. The changes needed for nicheStack were rather straightforward. Two special findings need to be known and understood:

- The nicheStack architecture as delivered with the Nios2 SDK did not support passing a parameter to a newly created task. This prevents proper usage of the Network Queue. As the stack was delivered as open source, appropriate changes were made and documented. Please take a close look at the doc directory, especially the niche_patch.txt file.

- The nicheStack implementation (still true for any Nios2 v6 and v7 release; a patch is announced for inclusion in v8) contains a serious bug related to the real-time OS that can be triggered at any time. According to Murphy, this always happens when it is least expected. While modifying ucOS/II task structures to support "round robin scheduling", which is not directly implemented in ucOS/II, a critical section wrapping was forgotten. One has to manually patch the appropriate files as documented in the niche_patch.txt file. An appropriate source snapshot was put into the patches subdirectory of the documentation.

Regarding performance, nicheStack works much better than LWIP. In estimates made with the Altera Nios2-based board mentioned above, the speed factor is about 1:4 to 1:6.
